Recent Posts

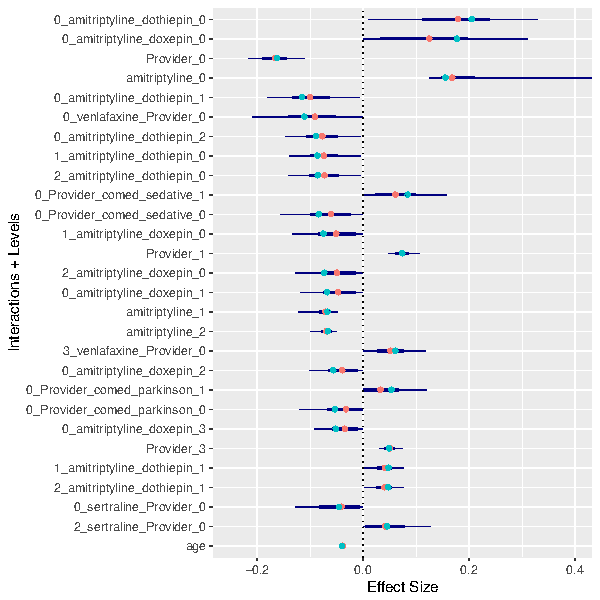

Add Interactions to Regularized Regression

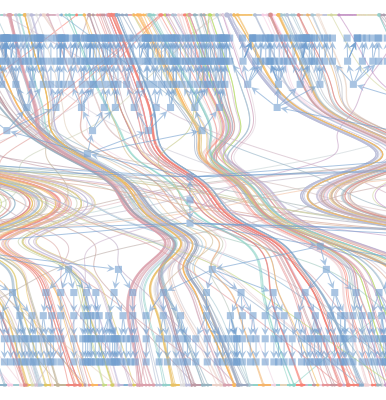

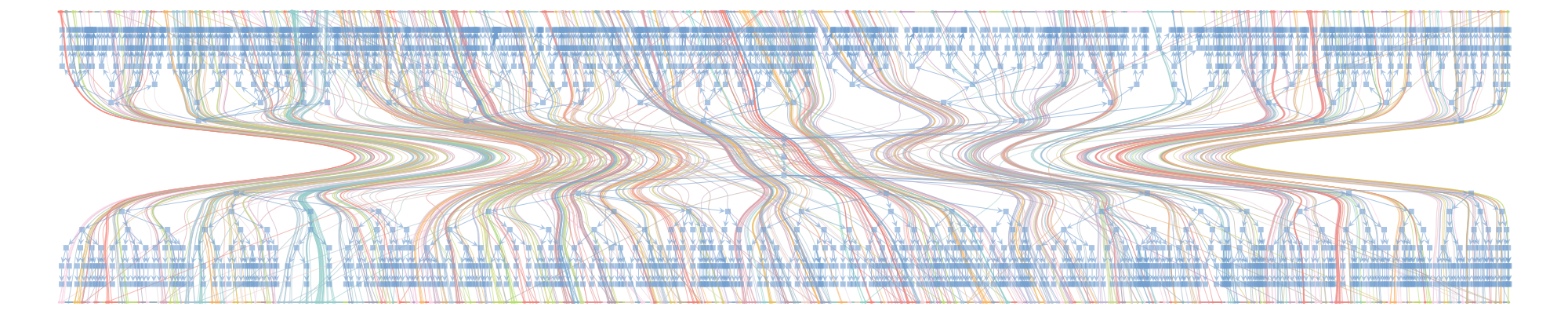

Big Networks in Healthcare

NUS-MIT Datathon

PhD internship in Machine Learning

In the internship, you’ll explore the latest machine learning methods, such as tree ensemble methods, graphical models and neural networks, and compare their performance against the current industry standard. It is a great opportunity to improve your machine learning skills and create valuable insights for the credit risk industry.

Applications close 20 June 2018.

Apply here: https://aprintern.org.au/2018/05/21/int-0431/

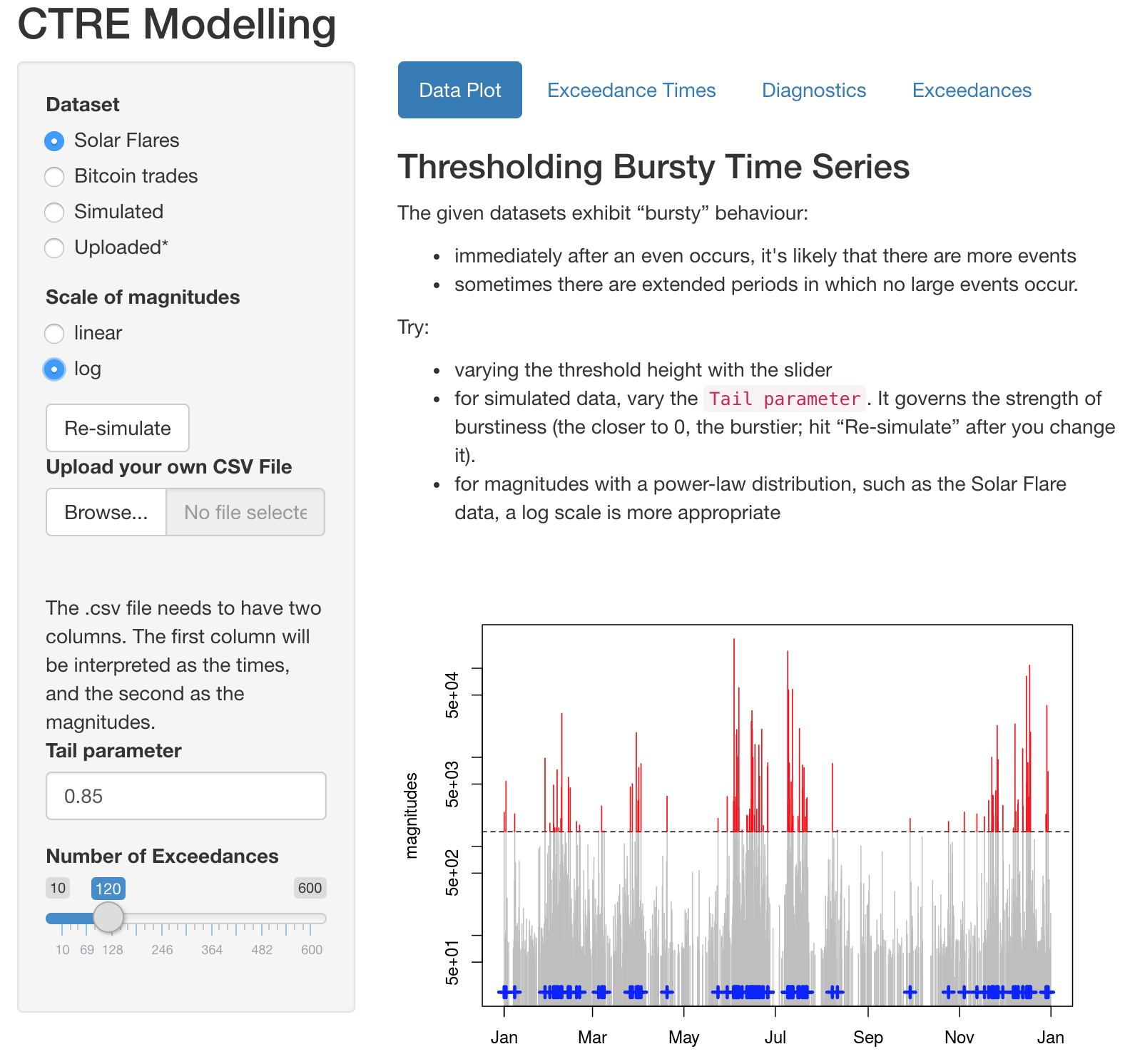

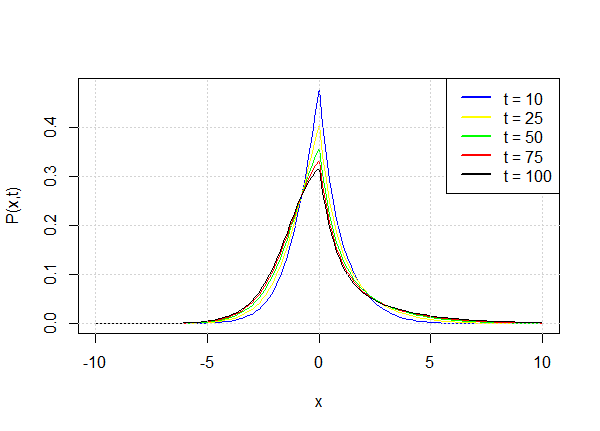

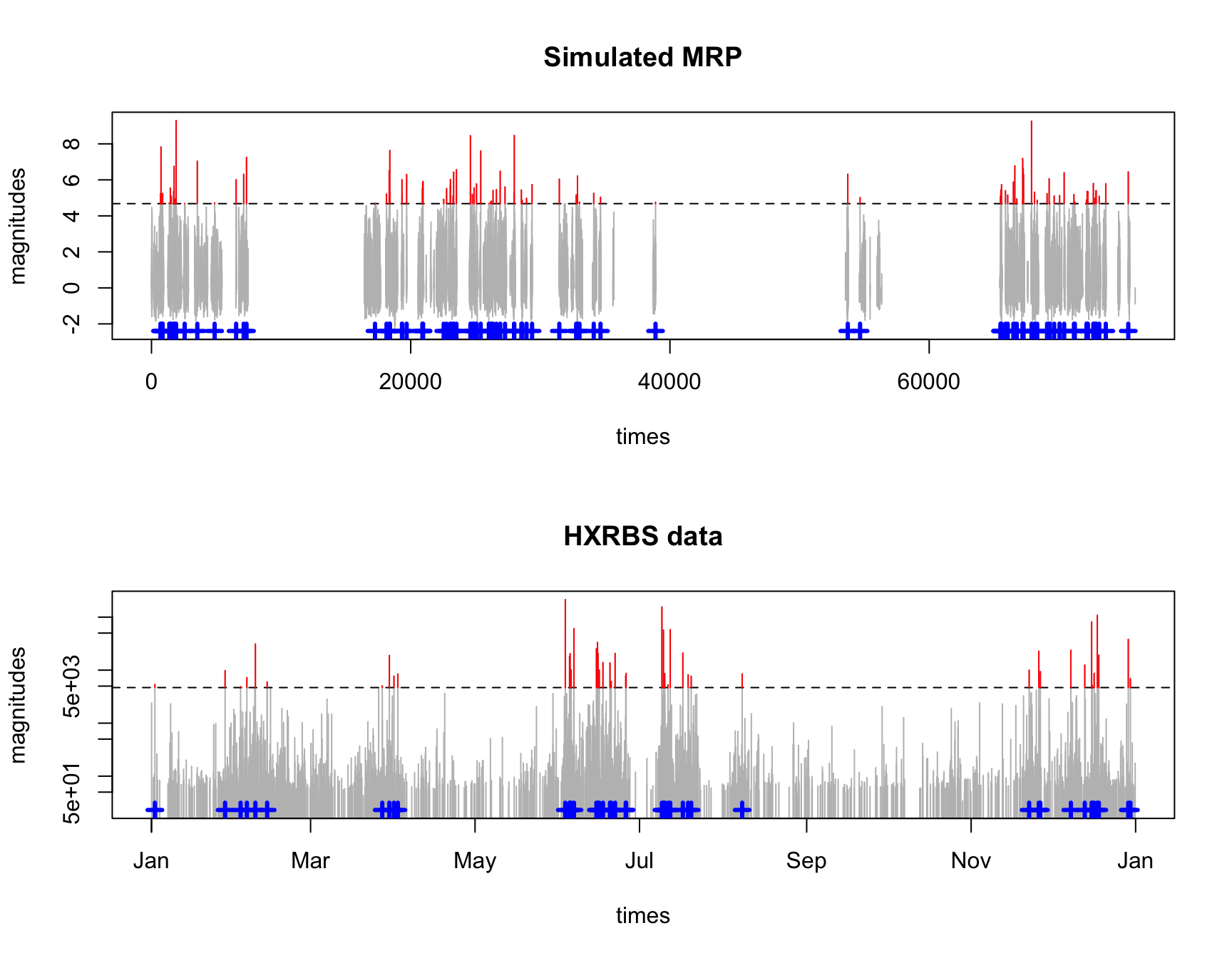

R package CTRE: thresholding bursty time series

The R package is now available on CRAN. It models extremes of ‘bursty’ time series via Continuous Time Random Exceedances (CTRE). (See the companion paper.)